In the digital realm, creating three-dimensional worlds has long been like sculpting smoke: the shapes exist, but capturing their true essence, texture, and light is elusive. Neural Radiance Fields, or NeRFs, emerged as the brush that finally paints with light. Instead of modelling explicit surfaces, a NeRF learns how much light every point in a scene emits and how much it blocks. From that, it can reconstruct what any ray passing through the scene would see. It's not just a leap in computer vision; it's a new language of seeing.

From Pixels to Perception: How NeRF Redefines Vision

Imagine standing in a cathedral, light scattering through stained glass, casting rainbow patches on the stone floor. Traditional 3D models would struggle to capture this dance of colour and depth. NeRF, however, learns the entire performance. It encodes how strongly every point in space glows, how much it obscures what lies behind it, and how its colour shifts as the viewing angle changes.

NeRF uses a neural network (a multilayer perceptron in the original paper). It takes multiple 2D images of a scene, captured from known viewpoints, and learns to predict the colour and volume density at any 3D coordinate. The colour also depends on the direction from which that point is viewed. When you ask it to render a new view, it doesn't just stitch together pictures. It recreates how light would behave if you actually moved your head. This capacity to synthesise unseen perspectives makes NeRF a turning point for immersive technology.

It’s no surprise that those pursuing a Generative AI course in Hyderabad are diving into NeRFs to understand how deep learning can interpret and generate reality itself.

The Secret Ingredient: Light as Data

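NeRFs treat light as both painter and subject. Instead of defining objects as geometric meshes or voxels, they represent the scene as a continuous volumetric field. This field is a mathematical function that maps a 3D coordinate and a viewing direction to a colour and a density (how opaque space is at that point). The neural network learns this function indirectly, through volume rendering: the field is rendered into images, and those images are compared against the real photographs.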
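The sketch below makes "a function that maps coordinates to colour and density" concrete in plain Python. `TinyRadianceField` is a hypothetical, untrained toy with random weights, far smaller than the network in the NeRF paper; it only illustrates the shape of the mapping. It follows the original design: density depends on position alone, while colour also sees the viewing direction.

```python
import math
import random


class TinyRadianceField:
    """Hypothetical toy radiance field: (position, view direction) -> (rgb, sigma).

    The weights are random, so outputs are meaningless until trained; the point
    is the signature of the function, not its values.
    """

    def __init__(self, hidden=16, seed=0):
        rng = random.Random(seed)

        def mat(rows, cols):
            return [[rng.gauss(0.0, 0.5) for _ in range(cols)] for _ in range(rows)]

        self.w_hidden = mat(hidden, 3)       # position -> hidden features
        self.b_hidden = [0.0] * hidden
        self.w_sigma = mat(1, hidden)[0]     # hidden features -> density
        self.w_rgb = mat(3, hidden + 3)      # hidden features + direction -> colour
        self.b_rgb = [0.0] * 3

    def __call__(self, position, direction):
        # Hidden features depend on the 3D position only (ReLU activation).
        h = [max(0.0, sum(w * p for w, p in zip(row, position)) + b)
             for row, b in zip(self.w_hidden, self.b_hidden)]
        # Density is view-independent; a ReLU keeps it non-negative.
        sigma = max(0.0, sum(w * v for w, v in zip(self.w_sigma, h)))
        # Colour also sees the viewing direction, so it can change with viewpoint;
        # a sigmoid keeps each channel in [0, 1].
        features = h + list(direction)
        rgb = tuple(
            1.0 / (1.0 + math.exp(-(sum(w * f for w, f in zip(row, features)) + b)))
            for row, b in zip(self.w_rgb, self.b_rgb)
        )
        return rgb, sigma
```

The input is just three real numbers plus a direction, so the field can be queried at any point, not only at the cells of a grid. That is what separates it from a voxel representation.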

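For every pixel, a ray is cast from the camera into the virtual space and sampled at many points along its path. The original NeRF used 192 samples per ray, split between a coarse and a fine pass. Colour is accumulated along the ray, weighted by density and by how much light survives the points in front. The result? A photo-realistic reconstruction that's not a mere copy of the original scene, but a learned model of it.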
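The accumulation along a ray can be sketched with the standard volume-rendering sum. Each sample contributes its colour weighted by two factors. The first is its own opacity, alpha = 1 - exp(-sigma * delta), where delta is the length of the interval it represents. The second is the transmittance: the fraction of light that survived all earlier samples. The sketch uses a hypothetical `toy_field` (a solid red ball) in place of a trained network. It applies stratified sampling, one random depth per equal-width bin, as the NeRF paper does.

```python
import math
import random


def toy_field(point, direction):
    """Hypothetical stand-in for a trained NeRF: a solid red ball of radius 1 at the origin."""
    x, y, z = point
    sigma = 5.0 if x * x + y * y + z * z <= 1.0 else 0.0  # density: opacity per unit length
    rgb = (1.0, 0.0, 0.0)  # this toy ignores `direction`; a real NeRF's colour may depend on it
    return rgb, sigma


def render_ray(field, origin, direction, near, far, n_samples=64, rng=None):
    """Composite colour along one ray; returns (rgb, accumulated opacity)."""
    if rng is None:
        rng = random.Random(0)
    # Stratified sampling: one random depth inside each of n_samples equal-width bins.
    bin_width = (far - near) / n_samples
    depths = [near + (i + rng.random()) * bin_width for i in range(n_samples)]

    color = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light that has survived all earlier samples
    for i, t in enumerate(depths):
        # Length of the interval this sample represents (the last one runs to `far`).
        delta = (depths[i + 1] if i + 1 < n_samples else far) - t
        point = tuple(o + t * d for o, d in zip(origin, direction))
        rgb, sigma = field(point, direction)
        alpha = 1.0 - math.exp(-sigma * delta)  # probability the ray stops in this interval
        weight = transmittance * alpha
        for k in range(3):
            color[k] += weight * rgb[k]
        transmittance *= 1.0 - alpha
    return tuple(color), 1.0 - transmittance
```

A ray aimed through the ball's centre, for example `render_ray(toy_field, (0.0, 0.0, -3.0), (0.0, 0.0, 1.0), 1.0, 5.0)`, comes back almost fully opaque and red. A ray that misses the ball returns zero opacity, so a background would show through.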

Once the model is trained, it can generate new images from any angle, as if the computer had developed a memory of the scene. For industries experimenting with AR, VR, and digital twins, this is a goldmine. And within research circles, particularly in advanced labs and institutions offering a Generative AI course in Hyderabad, NeRFs have become a central topic in modules on next-generation visual synthesis.

Neural Alchemy: Turning 2D Inputs into 3D Magic

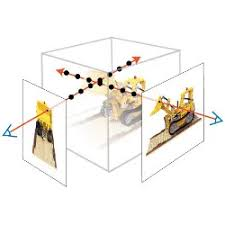

At first glance, the process may seem like wizardry. You provide NeRF with a set of 2D photographs, typically dozens, each tagged with the camera's orientation and position (its pose). The network then iteratively learns how these images relate to one another spatially. In doing so, it builds an implicit 3D model of the scene, a kind of “mental map”.
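A pinhole camera model shows how a pose turns into rays. In practice, poses for real photographs are often estimated with structure-from-motion tools such as COLMAP. `pixel_ray` below is a hypothetical helper, not any library's API. It assumes the camera looks down its local -z axis with y pointing up (an OpenGL-style convention). It also assumes `rotation` is a 3×3 camera-to-world matrix and `focal` is given in pixels.

```python
import math


def pixel_ray(i, j, width, height, focal, rotation, position):
    """Return (origin, unit direction) of the ray through pixel column i, row j.

    Assumed convention: camera frame has x right, y up, and looks down -z;
    `rotation` is camera-to-world, `position` is the camera centre in world space.
    """
    # Direction in the camera's own frame, through the pixel centre (+0.5).
    # Row 0 is the top of the image, hence the minus sign on y.
    d_cam = ((i + 0.5 - width / 2) / focal,
             -(j + 0.5 - height / 2) / focal,
             -1.0)
    # Rotate into world coordinates, then normalise to unit length.
    d_world = [sum(rotation[r][c] * d_cam[c] for c in range(3)) for r in range(3)]
    norm = math.sqrt(sum(v * v for v in d_world))
    return tuple(position), tuple(v / norm for v in d_world)
```

Every training pixel yields one such ray. The same function also generates rays for a brand-new pose when a novel view is rendered, which is how "moving your head" translates into computation.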

Through training, the network refines its parameters to minimise the difference between its rendered images and the real ones. Gradually, it discovers where the content of every pixel lies in 3D space. The result is both elegant and compact: deep learning compresses a world of visual complexity into a few megabytes of model weights.
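At its core, the training loop is gradient descent on a photometric loss. A real NeRF optimises a network's weights with automatic differentiation. The sketch below shrinks this to two hypothetical parameters: the density `sigma` and grey level `color` of a uniform medium. The medium is rendered with the volume-rendering result for a constant medium in front of a black background. Synthetic "photographs" are generated from known values, and the fit should recover them.

```python
import math


def render(sigma, color, length):
    # Uniform medium of thickness `length` over a black background:
    # accumulated opacity (1 - e^(-sigma * length)) times the medium's colour.
    return color * (1.0 - math.exp(-sigma * length))


def fit(path_lengths, observed, sigma=0.5, color=0.5, lr=0.5, steps=5000):
    """Minimise mean squared error between rendered and observed pixels."""
    n = len(path_lengths)
    history = []
    for _ in range(steps):
        loss = 0.0
        grad_sigma = grad_color = 0.0
        for length, target in zip(path_lengths, observed):
            transmitted = math.exp(-sigma * length)
            err = color * (1.0 - transmitted) - target  # rendered minus observed
            loss += err * err / n
            # Analytic derivatives of the mean squared error.
            grad_color += 2.0 * err * (1.0 - transmitted) / n
            grad_sigma += 2.0 * err * color * length * transmitted / n
        history.append(loss)
        sigma -= lr * grad_sigma
        color -= lr * grad_color
    return sigma, color, history


lengths = [0.25, 0.5, 1.0, 2.0]  # rays crossing the medium at different angles
observed = [render(1.5, 0.8, length) for length in lengths]
sigma, color, history = fit(lengths, observed)
```

Rays with different path lengths through the medium are what make both parameters recoverable. With a single length, many (`sigma`, `color`) pairs would produce the same pixel. In the same way, a NeRF needs views from several directions to pin down its 3D structure.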

The beauty of NeRF lies in its abstraction — it doesn’t “know” about objects, edges, or depth in the conventional sense. Instead, it learns how light behaves, and from that, reality emerges.

Applications: From Virtual Heritage to Synthetic Reality

The potential applications of NeRF stretch far beyond academic curiosity. In digital preservation, archaeologists use it to recreate historical sites in full 3D from limited photographs. Film studios employ NeRFs to capture detailed 3D environments without cumbersome photogrammetry rigs. Game developers use them to craft hyper-realistic environments that stay convincing from wherever the player moves.

Even in robotics, NeRF helps machines “see” their surroundings more intuitively. By reconstructing the world as a continuous field, it gives autonomous drones and robots a dense 3D model they can use to locate themselves and plan their paths.

Education is another frontier. Imagine virtual classrooms where learners walk through reconstructed ecosystems or explore engineering designs visualised in real time. In cities like Hyderabad, where AI innovation is booming, educators and technologists are already exploring these applications within advanced neural rendering modules and training programmes.

The Challenges Behind the Brilliance

Despite its wonder, NeRF comes with computational challenges. Training an original NeRF model could take hours or even days. Every pixel's ray in every image must be evaluated at many sample points, and each sample requires a pass through the network. Newer variants such as Instant-NGP (Instant Neural Graphics Primitives) dramatically reduce this time, bringing real-time rendering closer to reality.
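A back-of-the-envelope count shows why the original approach was slow. The dataset size here is an illustrative assumption: 100 photographs at 800×800 pixels. The sample count is the 64 coarse plus 128 fine samples per ray used in the original NeRF paper. Each query is one forward pass of the network.

```python
# Back-of-the-envelope cost of one pass over a training set (illustrative numbers).
images = 100                 # assumed number of training photographs
width, height = 800, 800     # assumed resolution of each photograph
samples_per_ray = 64 + 128   # coarse + fine samples per ray, as in the original NeRF paper

rays = images * width * height       # one ray per pixel
queries = rays * samples_per_ray     # one network evaluation per sample
print(f"{rays:,} rays -> {queries:,} network queries")
# prints "64,000,000 rays -> 12,288,000,000 network queries"
```

That is over twelve billion network evaluations for a single pass over the data, and training repeats such passes many times.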

Researchers are also exploring dynamic NeRFs that can handle moving objects, semantic NeRFs that understand scene components, and relightable NeRFs that adjust lighting conditions. As these advances unfold, the boundaries between captured and imagined worlds continue to blur.

The rise of these techniques marks a profound shift — from capturing images to capturing experiences.

Conclusion: The Light Learners of the Digital Age

Neural Radiance Fields represent more than a technological leap; they’re a philosophical one. For decades, computers tried to approximate the world. Now, through NeRF, they’re beginning to understand how light sculpts it. The line between vision and imagination grows faint — just as in the human mind.

As creative industries and researchers continue to harness NeRF’s potential, one truth becomes clear: the future of visual synthesis isn’t about pixels or polygons, but perception itself. And for anyone seeking to master this frontier, understanding NeRF is the first step toward teaching machines how to see — not as we code them to, but as we do.